Lately I have spent a considerable amount of time planning my backup strategy. A quick primer: I prefer not to place a huge amount of trust in proprietary backup solutions (e.g. Apple Time Machine) or proprietary file syncing solutions (e.g. Microsoft OneDrive). The reason is, among others, that I do not want to be locked in to a single solution tied to a particular company. Microsoft, Google, and Apple may be too big to fail, but no one can guarantee the longevity of the services they offer.

This calls for a self-hosted solution. It does not have to be all grand, feature-complete, with all bells and whistles. With the aim to be fully (or partially?) compliant with the Unix Philosophy, it has to “do one thing and do it well”.

Having said that, we need to give proper credit to the people who have developed great applications for file syncing and backup. Hence, I would like to briefly cover one application that has all the bells and whistles, and I will also list some counterparts. In the second section of this entry, I will lay out my plan for a local storage server with an off-site backup strategy.

This is my Project Lancelot: setting up a local storage server.

NextCloud

NextCloud shines as the replacement for Google Drive. For personal use, it does not need a lot of resources. Its documentation for NextCloud 12 says that it requires a minimum of 128MB RAM, but they recommend 512MB for more legroom. Translating this resource usage into monthly commitment, a user will only need to pay $5/month (US Dollar) for a 1GB virtual private server (VPS) on Digital Ocean (DO) or $2.50/month for a Vultr VPS with 512MB RAM capacity.

A 1GB DO VPS comes with 25GB of (fast) SSD disk space. If latency is a concern, DO has datacenters on (almost?) all continents; for example, users in Malaysia can use its Singapore datacenter. 25GB is a good amount of storage for office documents (Word, PowerPoint, Excel, PDF, etc.), but it could be a little too tight for raw data generated by scientific instruments.

This is an area where NextCloud has an edge over some alternatives (as far as I know). NextCloud officially supports Amazon Simple Storage Service (AWS S3), perhaps the most popular mass object storage offering (but not necessarily the cheapest).

I have been using AWS S3 for a few years already, storing around 10GB of files online, mostly from my photography side gigs. I have never paid more than $1/month. However, the AWS pricing scheme (in general) is a bit confusing because it depends on the geographic region where you choose to store your data. The difference is not massive, though: standard storage in Northern Virginia is $0.023/GB per month vs. $0.025/GB per month in Singapore.
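To make the pricing arithmetic concrete, here is a minimal sketch that estimates the storage-only bill for the two regions mentioned above. The prices are the ones quoted in this post; the function name is just illustrative, and the estimate deliberately ignores request and data-transfer fees, so treat it as a rough lower bound rather than a real invoice.

```python
# Per-GB monthly storage prices quoted in this post (US dollars).
# Region codes are the standard AWS identifiers for these two locations.
PRICE_PER_GB = {
    "us-east-1 (N. Virginia)": 0.023,
    "ap-southeast-1 (Singapore)": 0.025,
}


def monthly_storage_cost(size_gb: float, price_per_gb: float) -> float:
    """Storage-only cost per month; request and transfer fees are ignored."""
    return size_gb * price_per_gb


for region, price in PRICE_PER_GB.items():
    cost = monthly_storage_cost(10, price)
    print(f"{region}: ${cost:.2f}/month for 10 GB")
```

For my roughly 10GB of photos this works out to about a quarter of a dollar per month in either region, which is consistent with never having paid more than $1/month.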

Similar to Google Drive, the NextCloud sync client can be installed on major operating systems and is also available on most mobile platforms. It is worth noting that NextCloud has a good number of plugins to extend its functionality; for example, there are an RSS feed reader, a to-do app, a calendar app, etc.

Thus, combining NextCloud on a $5/month DO VPS with low-cost and virtually unlimited storage on AWS S3 can make a really powerful setup for offsite cloud-based file sync/backup. For a quick comparison, Google Drive offers 1TB of storage at $9.99/month, and Microsoft OneDrive offers its Office 365 Personal plan, which comes with 1TB of storage plus the Office applications, at $69.99/year.

Comparing NextCloud with similar self-hosted counterparts, there are Seafile and Pydio, among others.

NAS & B2

Going back to the philosophy “do one thing and do it well”, this is my reason not to go with NextCloud or other similar alternatives. This is just highlighting my preference that I am comfortable with, not discouraging you from trying & using NextCloud. Don’t get me wrong; NextCloud is great and I am elated that it has served many happy customers.

The decision to not go with NextCloud here is congruent with my philosophy:

If there is an absolutely demanding alternative, that alternative should gain priority. Complexity can summon misery, but lies in complexity often the beauty of modularity.

Instead of using a VPS with AWS S3 as the storage back-end, I would like to get a small physical file server that sits in my bedroom, is wired to my local home network, and has at least one remote backup location.

Here is the reason why, and this will be a technical explanation. My internet connection to the outside world runs at 100 Megabits/sec (download; I can’t recall how fast the upload is). Thus, my sync speed with, say, a VPS here in New York would be constrained by how fast I could upload and download files, typically in the neighborhood of 6-8 Megabytes/sec. Note that 1 byte is equal to 8 bits, hence 100 Megabits/sec = 12.5 Megabytes/sec of theoretical throughput.

This is great for small files but not for large files. A typical HDD write speed on a SATA bus is around 80 Megabytes/sec or faster, while a typical SSD on the same bus can approach the SATA III interface limit of 600 Megabytes/sec. So here we have 80 Megabytes/sec, the slowest disk write speed, vs. 12.5 Megabytes/sec internet up/download speed. I don’t have to point out which is the clear winner here, do I?
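To put those numbers side by side, here is a small sketch that converts the link speed from bits to bytes and estimates how long one large file would take at each rate. The 10 GB file size is an assumption picked purely for illustration, and the function names are my own; real transfers will be slower because of protocol overhead.

```python
def megabits_to_megabytes(rate_megabits: float) -> float:
    """Convert Megabits/sec to Megabytes/sec (1 byte = 8 bits)."""
    return rate_megabits / 8


def transfer_seconds(size_gb: float, rate_mb_per_s: float) -> float:
    """Idealized transfer time, using 1 GB = 1000 MB and no overhead."""
    return size_gb * 1000 / rate_mb_per_s


internet_rate = megabits_to_megabytes(100)  # 12.5 Megabytes/sec
hdd_rate = 80.0                             # Megabytes/sec, figure from this post
file_size_gb = 10                           # hypothetical file size

for label, rate in [("internet link", internet_rate), ("HDD write", hdd_rate)]:
    seconds = transfer_seconds(file_size_gb, rate)
    print(f"{label}: {rate} MB/s -> {seconds:.0f} s for {file_size_gb} GB")
```

Under these assumptions the same 10 GB takes 800 seconds over the internet link but only 125 seconds at HDD write speed.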

With the goal of achieving the highest throughput, I have come to the conclusion that I would like to run a small storage server with an HDD as the storage device. Specifically, I am choosing the ROCK64 by PINE64 (instead of the Raspberry Pi 3 B+) with a Western Digital Red 1TB 2.5-inch 5,400 RPM drive. The whole setup would cost me around $120, maybe a bit more.

Note: I was thinking of doing something crazy, something like setting up a RAID5 system with three 1TB HDDs for redundancy and resiliency. That would require me to build a mini x86-based server. However, it would make my setup less portable and would cost more.

So what is my specific plan to turn a ROCK64 compute board attached to 1TB of storage into a local file server? From here I could take two different routes: going with a minimal installation or using OpenMediaVault, a well-known Linux distribution for running a storage server.

Here I would like to introduce the term Network-Attached Storage (NAS). This is exactly what I am planning to build. Essentially, a NAS is a low-powered computer that is designed to be a storage server and nothing else. But of course, the term NAS is loosely defined nowadays, ranging from just a local storage server to a machine that runs all sorts of things.

In theory, with this setup I could achieve a network transfer speed of 80 Megabytes/sec. That is constrained by the HDD write speed, since the gigabit ethernet (GbE) adapter of the ROCK64 usually saturates at around 100 Megabytes/sec, assuming my local home network is running on a gigabit connection (which it will). That’s a pretty seamless transfer operation compared to having a cloud that is constrained by my internet speed (12.5 Megabytes/sec).
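The reasoning here is simply that a transfer can only go as fast as its slowest stage. A minimal sketch of that idea, using the rates from this post (the stage names and the `bottleneck` helper are my own labels, not anything measured on real hardware):

```python
from typing import Dict, Tuple


def bottleneck(stages: Dict[str, float]) -> Tuple[str, float]:
    """Return the (name, rate) of the slowest stage in a transfer path."""
    name = min(stages, key=stages.get)
    return name, stages[name]


# Rates in Megabytes/sec, taken from the figures quoted in this post.
lan_path = {"HDD write": 80.0, "GbE adapter": 100.0}
cloud_path = {"HDD write": 80.0, "internet link": 12.5}

print(bottleneck(lan_path))    # ('HDD write', 80.0)
print(bottleneck(cloud_path))  # ('internet link', 12.5)
```

On the local network the disk is the limit; once the internet link is in the path, it becomes the limit instead.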

It looks like this setup is promising. But what would happen if the system failed? Or if my place were hit by a natural catastrophe, or just plain bad luck? This calls for an off-site backup mechanism.

There are two options of the same kind here, and I can go with either one or use both at the same time: I can set up a system that backs up the whole content of the HDD to AWS S3 (option 1), to Backblaze B2 (option 2), or to both.

I am still not quite sure how I would approach this, but even running both AWS S3 and B2 at the same time won’t cost a fortune. Essentially, what I am planning to do is to have some scripts tell the operating system to initiate a full system backup at a fixed interval, e.g. every 2 days, and only push new changes.
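As a rough idea of what "only push new changes" could look like, here is a sketch that keeps a manifest of file hashes and hands only new or modified files to an upload step. Everything here is an assumption for illustration: the `upload` callable is a placeholder for whatever S3 or B2 tool ends up being used, the manifest format is made up, and the manifest file should live outside the folder being backed up. Running it every 2 days would be left to a scheduler such as cron.

```python
import hashlib
import json
from pathlib import Path
from typing import Callable, Dict, List


def file_digest(path: Path) -> str:
    """SHA-256 of a file, read in 1 MiB chunks to keep memory use low."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            h.update(chunk)
    return h.hexdigest()


def changed_files(root: Path, manifest: Dict[str, str]) -> Dict[str, str]:
    """Return {relative_path: digest} for files that are new or modified."""
    changed = {}
    for path in sorted(root.rglob("*")):
        if path.is_file():
            rel = path.relative_to(root).as_posix()
            digest = file_digest(path)
            if manifest.get(rel) != digest:
                changed[rel] = digest
    return changed


def run_backup(
    root: Path,
    manifest_path: Path,
    upload: Callable[[Path, str], None],  # hypothetical: (local file, remote key)
) -> List[str]:
    """Upload only changed files, then record their hashes in the manifest."""
    manifest = json.loads(manifest_path.read_text()) if manifest_path.exists() else {}
    changed = changed_files(root, manifest)
    for rel in changed:
        upload(root / rel, rel)
    manifest.update(changed)
    manifest_path.write_text(json.dumps(manifest, indent=2))
    return list(changed)
```

Calling `run_backup` twice in a row without touching any file should upload nothing the second time. Note that this sketch does not handle deleted files or failed uploads, which a real backup script would need to.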

Since I am not planning to go into too many details, this is what I have in mind for now.

note on sync-ing

Most file sync clients (OneDrive, etc.) come with the option to run at startup (i.e. when the computer starts) and perform a background sync whenever they sense changes. This is great because you set it and forget it. It is bad because you set it and forget it.

Bad things can happen when you forget something. Users might not realize that a sync client is not syncing files anymore (sounds like “a human just forgot to breathe”). I had a similar issue in the past, and fortunately I caught the error. Since then I have developed a general attitude toward applications that start at boot: don’t let them do that.

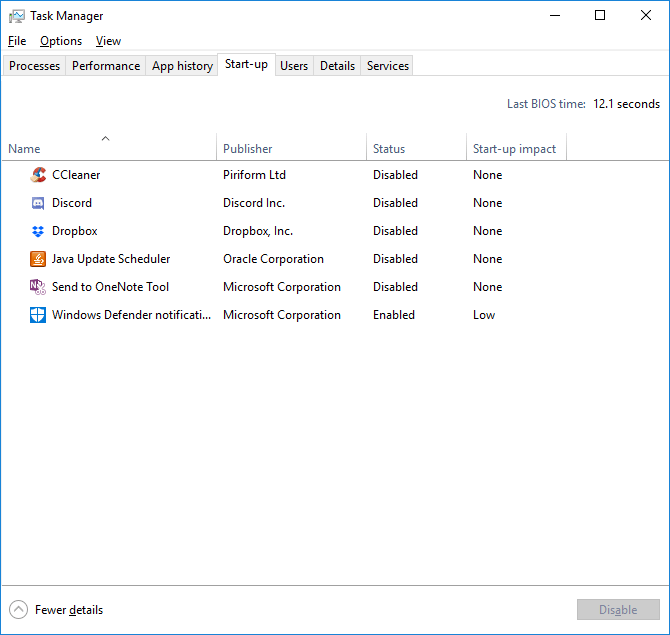

If an application asks me to start at boot, I won’t allow it to do so. I get to decide whether I want it to be running or not. Thanks to this attitude, my startup experience is usually decent: I get to use my computer 5-15 seconds after boot-up is complete, instead of waiting for 40-80 seconds because the computer is starting up N other applications as well. This is a good habit for keeping a computer snappy.

Disable all startups, except the AV.

Going back to sync client operation, I make it a habit to open my sync clients (usually OneDrive & Dropbox) right before shutting down my computer. This has two benefits: (1) I can verify that the sync process is free from problems, and (2) I can see which files have changed by looking at the sync log. It is a little tedious to do this, but I can assure you it is a good habit for keeping the integrity (well, kinda) of your files.
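If you want something to compare against the sync log, a small script can list the files that changed locally in the last few hours. This is only a sketch of that idea: the function name and the time window are my own choices, and it relies on file modification times, which not every application updates reliably.

```python
import time
from pathlib import Path
from typing import List, Optional


def recently_modified(
    folder: Path, hours: float, now: Optional[float] = None
) -> List[str]:
    """List files under `folder` modified within the last `hours` hours."""
    now = time.time() if now is None else now
    cutoff = now - hours * 3600
    return sorted(
        p.relative_to(folder).as_posix()
        for p in folder.rglob("*")
        if p.is_file() and p.stat().st_mtime >= cutoff
    )
```

For example, `recently_modified(Path.home() / "Dropbox", hours=12)` would list what changed today (the folder path is an assumption about where the client keeps its files). Anything on that list that is missing from the client's sync log deserves a second look.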

closing words

I can’t wait to start piecing together my NAS!